Overview

Welcome to The Burn Book 👋

This book will help you get started with the Burn deep learning framework, whether you are an advanced user or a beginner. We have crafted some sections for you:

- Basic Workflow: From Training to Inference: We'll start with the fundamentals, guiding you through the entire workflow, from training your models to deploying them for inference. This section lays the groundwork for your Burn expertise.

- Building Blocks: Dive deeper into Burn's core components, understanding how they fit together. This knowledge forms the basis for more advanced usage and customization.

- Saving & Loading Models: Learn how to easily save and load your trained models.

- Custom Training Loop: Gain the power to customize your training loops, fine-tuning your models to meet your specific requirements. This section empowers you to harness Burn's flexibility to its fullest.

- Importing Models: Learn how to import ONNX and PyTorch models, expanding your compatibility with other deep learning ecosystems.

- Advanced: Finally, venture into advanced topics, exploring Burn's capabilities at their peak. This section caters to those who want to push the boundaries of what's possible with Burn.

Throughout the book, we assume a basic understanding of deep learning concepts, but we may refer to additional material when it seems appropriate.

Why Burn?

Why bother with the effort of creating an entirely new deep learning framework from scratch when PyTorch, TensorFlow, and other frameworks already exist? Spoiler alert: Burn isn't merely a replication of PyTorch or TensorFlow in Rust. It represents a novel approach, placing significant emphasis on making the right compromises in the right areas to facilitate exceptional flexibility, high performance, and a seamless developer experience. Burn isn’t a framework specialized for only one type of application; it is designed to serve as a versatile framework suitable for a wide range of research and production uses. The foundation of Burn's design revolves around three key user profiles:

Machine Learning Researchers require tools to construct and execute experiments efficiently. It’s essential for them to iterate quickly on their ideas and design testable experiments which can help them discover new findings. The framework should facilitate the swift implementation of cutting-edge research while ensuring fast execution for testing.

Machine Learning Engineers are another important demographic to keep in mind. Their focus leans less on swift implementation and more on establishing robustness, seamless deployment, and cost-effective operations. They seek dependable, economical models capable of achieving objectives without excessive expense. The whole machine learning workflow —from training to inference— must be as efficient as possible with minimal unpredictable behavior.

Low-level Software Engineers working with hardware vendors want their processing units to run models as fast as possible to gain a competitive advantage. This endeavor involves harnessing hardware-specific features such as Tensor Cores on Nvidia GPUs. Since they mostly work at the system level, they want to have absolute control over how the computation will be executed.

The goal of Burn is to satisfy all of those personas!

Getting Started

Burn is a deep learning framework in the Rust programming language. Therefore, it goes without saying that one must understand the basic notions of Rust. Reading the first chapters of the Rust Book is recommended, but don't worry if you're just starting out. We'll try to provide as much context and as many references to external resources as required. Just look out for the 🦀 Rust Note indicators.

Installing Rust

For installation instructions, please refer to the installation page. It explains in detail the most convenient way for you to install Rust on your computer, which is the very first thing to do to start using Burn.

Creating a Burn application

Once Rust is correctly installed, create a new Rust application by using Rust's build system and package manager Cargo. It is automatically installed with Rust.

🦀 Cargo Cheat Sheet

Cargo is a very useful tool to manage Rust projects because it handles a lot of tasks. More precisely, it is used to compile your code, download the libraries/packages your code depends on, and build said libraries.

Below is a quick cheat sheet of the main cargo commands you might use throughout this guide.

| Command | Description |
|---|---|
| cargo new path | Create a new Cargo package in the given directory. |
| cargo add crate | Add dependencies to the Cargo.toml manifest file. |
| cargo build | Compile the local package and all of its dependencies (in debug mode, use -r for release). |
| cargo check | Check the local package for compilation errors (much faster). |
| cargo run | Run the local package binary. |

For more information, check out Hello, Cargo! in the Rust Book.

In the directory of your choice, run the following:

cargo new my_burn_app

This will initialize the my_burn_app project directory with a Cargo.toml file and a src directory with an auto-generated main.rs file inside. Head inside the directory to check:

cd my_burn_app

Then, add Burn as a dependency:

cargo add burn --features wgpu

Finally, compile the local package by executing the following:

cargo build

That's it, you're ready to start! You have a project configured with Burn and the WGPU backend, which allows you to execute low-level operations on any platform using the GPU.

Writing a code snippet

The src/main.rs was automatically generated by Cargo, so let's replace its content with the

following:

use burn::tensor::Tensor;

use burn::backend::Wgpu;

// Type alias for the backend to use.

type Backend = Wgpu;

fn main() {

let device = Default::default();

// Creation of two tensors, the first with explicit values and the second one with ones, with the same shape as the first

let tensor_1 = Tensor::<Backend, 2>::from_data([[2., 3.], [4., 5.]], &device);

let tensor_2 = Tensor::<Backend, 2>::ones_like(&tensor_1);

// Print the element-wise addition (done with the WGPU backend) of the two tensors.

println!("{}", tensor_1 + tensor_2);

}

🦀 Use Declarations

To bring any Burn module or item into scope, a use declaration is added.

In the example above, we wanted to bring the Tensor struct and Wgpu backend into scope with the following:

use burn::tensor::Tensor;

use burn::backend::Wgpu;

This is pretty self-explanatory in this case. However, the same declarations could be written with a shortcut that binds multiple paths sharing a common prefix in a single declaration:

use burn::{tensor::Tensor, backend::Wgpu};

In this example, the common prefix is pretty short and there are only two items to bind locally. Therefore, the first form with two use declarations might be preferred, but both are valid. For more details on the use keyword, take a look at this section of the Rust Book or the Rust reference.

🦀 Generic Data Types

If you're new to Rust, you're probably wondering why we had to use Tensor::<Backend, 2>::.... That's because the Tensor struct is generic over multiple concrete data types. More specifically, a Tensor struct has 3 generic arguments: the backend, the number of dimensions (rank) and the data type (defaults to Float). Here, we only specify the backend and number of dimensions since a Float tensor is used by default. For more details on the Tensor struct, take a look at this section.

Most of the time when generics are involved, the compiler can infer the generic parameters

automatically. In this case, the compiler needs a little help. This can usually be done in one of

two ways: providing a type annotation or binding the generic parameter via the turbofish ::<>

syntax. In the example we used the so-called turbofish syntax, but we could have used type

annotations instead.

let tensor_1: Tensor<Backend, 2> = Tensor::from_data([[2., 3.], [4., 5.]], &device);

let tensor_2 = Tensor::ones_like(&tensor_1);

You probably noticed that we provided a type annotation for only the first tensor, yet it still worked.

That's because the compiler (correctly) inferred that tensor_2 had the same generic parameters.

The same could have been done in the original example, but specifying the parameters for both is

more explicit.
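The two ways of helping the compiler can also be tried without Burn. The following is a minimal sketch using only the standard library; the function names are made up for illustration. Both functions parse strings into f32 values, the first naming the types with the turbofish syntax and the second with a type annotation.

```rust
/// Parses every word as an `f32`, naming the target types with the turbofish syntax.
/// (Hypothetical helper, for illustration only.)
fn parse_with_turbofish(words: &[&str]) -> Vec<f32> {
    let values = words
        .iter()
        .map(|w| w.parse::<f32>().expect("not a number"))
        .collect::<Vec<_>>();
    values
}

/// Parses every word as an `f32`, letting a type annotation drive the inference.
/// (Hypothetical helper, for illustration only.)
fn parse_with_annotation(words: &[&str]) -> Vec<f32> {
    // The annotation on `values` tells the compiler what `collect` must build,
    // which in turn determines the type that `parse` has to produce.
    let values: Vec<f32> = words
        .iter()
        .map(|w| w.parse().expect("not a number"))
        .collect();
    values
}

fn main() {
    let words = ["2", "3", "4", "5"];
    let a = parse_with_turbofish(&words);
    let b = parse_with_annotation(&words);
    println!("{:?} {:?}", a, b);
}
```

Which form to prefer is mostly a matter of readability: the turbofish keeps the type next to the call that needs it, while the annotation keeps the expression itself shorter.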

By running cargo run, you should now see the result of the addition:

Tensor {

data:

[[3.0, 4.0],

[5.0, 6.0]],

shape: [2, 2],

device: BestAvailable,

backend: "wgpu",

kind: "Float",

dtype: "f32",

}

While the previous example is somewhat trivial, the upcoming basic workflow section will walk you through a much more relevant example for deep learning applications.

Using prelude

Burn comes with a variety of things in its core library. When creating a new model or using an existing one for inference, you may need to import every single component you used, which could be a little verbose.

To address it, a prelude module is provided, allowing you to easily import commonly used structs and macros as a group:

use burn::prelude::*;

which is equivalent to:

use burn::{

config::Config,

module::Module,

nn,

tensor::{

backend::Backend, Bool, Data, Device, ElementConversion, Float, Int, Shape, Tensor,

},

};

For the sake of simplicity, the subsequent chapters of this book will all use this form of importing. However, this does not include the content in the Building Blocks chapter, as explicit importing aids users in grasping the usage of particular structures and macros.

Explore examples

In the next chapter you'll have the opportunity to implement the whole Burn

guide example yourself in a step by step manner.

Many additional Burn examples are available in the examples directory. Burn examples are organized as library crates with one or more examples that are executable binaries. An example can then be executed using the following cargo command line in the root of the Burn repository:

cargo run --example <example name>

To learn more about crates and examples, read the Rust section below.

🦀 About Rust crates

Each Burn example is a package located in a subdirectory of the examples directory. A package is composed of one or more crates.

A package is a bundle of one or more crates that provides a set of functionality. A package

contains a Cargo.toml file that describes how to build those crates.

A crate is a compilation unit in Rust. It could be a single file, but it is often easier to split up crates into multiple modules.

A module lets us organize code within a crate for readability and easy reuse. Modules also allow us to control the privacy of items. For instance, the pub(crate) visibility modifier makes a module available everywhere inside the crate while hiding it from the crate's users. In the snippet below, four modules are declared: two of them are public and visible to the users of the crate, one is public inside the crate only, and the last one, declared without any visibility modifier, is private. These modules can be single files or directories with a mod.rs file inside.

pub mod data;

pub mod inference;

pub(crate) mod model;

mod training;

A crate can come in one of two forms: a binary crate or a library crate. When compiling a crate,

the compiler first looks in the crate root file (src/lib.rs for a library crate and src/main.rs

for a binary crate). Any module declared in the crate root file will be inserted in the crate for

compilation.
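The visibility rules described above can be explored in a single file with inline modules. This is a minimal sketch, not code from the Burn examples; the module and function names are hypothetical.

```rust
mod shapes {
    // Public module: visible to everything that can see `shapes`.
    pub mod area {
        pub fn square(side: u32) -> u32 {
            // A child module may use private items of its ancestors.
            super::helpers::mul(side, side)
        }
    }

    // Crate-visible module: usable anywhere in this crate, hidden from other crates.
    pub(crate) mod perimeter {
        pub(crate) fn square(side: u32) -> u32 {
            4 * side
        }
    }

    // Private module (no visibility modifier): only usable inside `shapes`.
    mod helpers {
        pub fn mul(a: u32, b: u32) -> u32 {
            a * b
        }
    }
}

fn main() {
    println!("area: {}", shapes::area::square(3));
    println!("perimeter: {}", shapes::perimeter::square(3));
    // shapes::helpers::mul(2, 3); // would not compile: module `helpers` is private
}
```

In a real package each of these modules would typically live in its own file (for example src/area.rs), with only the mod declarations remaining in the crate root.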

All Burn examples are library crates and they can contain one or more executable examples that use the library. We even have some Burn examples that use the library crate of other examples.

The examples are unique files under the examples directory. Each file produces an executable file

with the same name. Each example can then be executed with cargo run --example <executable name>.

Below is a file tree of a typical Burn example package:

examples/burn-example
├── Cargo.toml
├── examples
│   ├── example1.rs
│   ├── example2.rs
│   └── ...
└── src
    ├── lib.rs
    ├── module1.rs
    ├── module2.rs
    └── ...

For more information on each example, see their respective README.md file.

Note that some examples use the

datasets library by HuggingFace to download the

datasets required in the examples. This is a Python library, which means that you will need to

install Python before running these examples. This requirement will be clearly indicated in the

example's README when applicable.

Guide

This guide will walk you through the process of creating a custom model built with Burn. We will train a simple convolutional neural network model on the MNIST dataset and prepare it for inference.

For clarity, we sometimes omit imports in our code snippets. For more details, please refer to the

corresponding code in the examples/guide directory.

We reproduce this example in a step-by-step fashion, from dataset creation to modeling and training

in the following sections. It is recommended to use the capabilities of your IDE or text editor to

automatically add the missing imports as you add the code snippets to your code.

Be sure to check out the git branch corresponding to the version of Burn you are using to follow this guide.

The current version of Burn is 0.13.2 and the corresponding branch to check out is release/0.13.

The code for this demo can be executed from Burn's base directory using the command:

cargo run --example guide

Key Learnings

- Creating a project

- Creating neural network models

- Importing and preparing datasets

- Training models on data

- Choosing a backend

- Using a model for inference

Model

The first step is to create a project and add the different Burn dependencies. Start by creating a new project with Cargo:

cargo new my-first-burn-model

As mentioned previously, this will initialize

your my-first-burn-model project directory with a Cargo.toml and a src/main.rs file.

In the Cargo.toml file, add the burn dependency with train, wgpu and vision features.

Then run cargo build to build the project and import all the dependencies.

[package]

name = "my-first-burn-model"

version = "0.1.0"

edition = "2021"

[dependencies]

burn = { version = "0.13.2", features = ["train", "wgpu", "vision"] }

Our goal will be to create a basic convolutional neural network used for image classification. We will keep the model simple by using two convolution layers followed by two linear layers, some pooling and ReLU activations. We will also use dropout to improve training performance.

Let us start by defining our model struct in a new file src/model.rs.

use burn::{

nn::{

conv::{Conv2d, Conv2dConfig},

pool::{AdaptiveAvgPool2d, AdaptiveAvgPool2dConfig},

Dropout, DropoutConfig, Linear, LinearConfig, Relu,

},

prelude::*,

};

#[derive(Module, Debug)]

pub struct Model<B: Backend> {

conv1: Conv2d<B>,

conv2: Conv2d<B>,

pool: AdaptiveAvgPool2d,

dropout: Dropout,

linear1: Linear<B>,

linear2: Linear<B>,

activation: Relu,

}

There are two major things going on in this code sample.

- You can create a deep learning module with the #[derive(Module)] attribute on top of a struct. This will generate the necessary code so that the struct implements the Module trait. This trait will make your module both trainable and (de)serializable while adding related functionalities. As with other derivable traits often used in Rust, such as Clone, PartialEq or Debug, each field within the struct must also implement the Module trait.

🦀 Trait

Traits are a powerful and flexible Rust language feature. They provide a way to define shared behavior for a particular type, which can be shared with other types.

A type's behavior consists of the methods called on that type. Since all Modules should implement the same functionality, it is defined as a trait. Implementing a trait on a particular type usually requires the user to implement the defined behaviors of the trait for their types, though that is not the case here as explained above with the derive attribute. Check out the explainer below to learn why.

For more details on traits, take a look at the associated chapter in the Rust Book.

🦀 Derive Macro

The derive attribute allows traits to be implemented easily by generating code that will implement a trait with its own default implementation on the type that was annotated with the derive syntax.

This is accomplished through a feature of Rust called procedural macros, which allow us to run code at compile time that operates over Rust syntax, both consuming and producing Rust syntax. Using the attribute #[my_macro], you can effectively extend the provided code. You will see that the derive macro is very frequently employed to recursively implement traits, where the implementation consists of the composition of all fields.

In this example, we want to derive the Module and Debug traits.

#[derive(Module, Debug)]
pub struct MyCustomModule<B: Backend> {
    linear1: Linear<B>,
    linear2: Linear<B>,
    activation: Relu,
}

The basic Debug implementation is provided by the compiler to format a value using the {:?} formatter. For ease of use, the Module trait implementation is automatically handled by Burn so you don't have to do anything special. It essentially acts as a parameter container.

For more details on derivable traits, take a look at the Rust appendix, reference or example.

- Note that the struct is generic over the Backend trait. The backend trait abstracts the underlying low level implementations of tensor operations, allowing your new model to run on any backend. Contrary to other frameworks, the backend abstraction isn't determined by a compilation flag or a device type. This is important because you can extend the functionalities of a specific backend (see backend extension section), and it allows for an innovative autodiff system. You can also change backend during runtime, for instance to compute training metrics on a cpu backend while using a gpu one only to train the model. In our example, the backend in use will be determined later on.

🦀 Trait Bounds

Trait bounds provide a way for generic items to restrict which types are used as their parameters. The trait bounds stipulate what functionality a type implements. Therefore, bounding restricts the generic to types that conform to the bounds. It also allows generic instances to access the methods of traits specified in the bounds.

For a simple but concrete example, check out the Rust By Example on bounds.

In Burn, the Backend trait enables you to run tensor operations using different implementations as it abstracts tensor, device and element types. The getting started example illustrates the advantage of having a simple API that works for different backend implementations. While it used the WGPU backend, you could easily swap it with any other supported backend.

// Choose from any of the supported backends.
// type Backend = Candle<f32, i64>;
// type Backend = LibTorch<f32>;
// type Backend = NdArray<f32>;
type Backend = Wgpu;

// Creation of two tensors.
let tensor_1 = Tensor::<Backend, 2>::from_data([[2., 3.], [4., 5.]], &device);
let tensor_2 = Tensor::<Backend, 2>::ones_like(&tensor_1);

// Print the element-wise addition (done with the selected backend) of the two tensors.
println!("{}", tensor_1 + tensor_2);

For more details on trait bounds, check out the Rust trait bound section or reference.
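To make the three notes above (traits, derive and trait bounds) more concrete, here is a minimal sketch that uses only the standard library. ToyBackend, LoopBackend, IterBackend and Summary are hypothetical names invented for this illustration and are unrelated to Burn's actual Backend trait; the point is only to show how a function that is generic over a trait can run on interchangeable implementations.

```rust
/// Hypothetical trait standing in for a "backend": it only knows how to add two slices.
trait ToyBackend {
    fn name() -> &'static str;
    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32>;
}

/// First implementation: an explicit index loop.
struct LoopBackend;

/// Second implementation: iterator adaptors.
struct IterBackend;

impl ToyBackend for LoopBackend {
    fn name() -> &'static str {
        "loop"
    }

    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32> {
        let len = lhs.len().min(rhs.len());
        let mut out = Vec::with_capacity(len);
        for i in 0..len {
            out.push(lhs[i] + rhs[i]);
        }
        out
    }
}

impl ToyBackend for IterBackend {
    fn name() -> &'static str {
        "iter"
    }

    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32> {
        lhs.iter().zip(rhs).map(|(a, b)| a + b).collect()
    }
}

/// The trait bound `B: ToyBackend` restricts `B` to types implementing the trait
/// and gives the function access to the trait's methods.
fn add_ones<B: ToyBackend>(values: &[f32]) -> Vec<f32> {
    let ones = vec![1.0; values.len()];
    B::add(values, &ones)
}

/// `Debug` and `PartialEq` are derived: the compiler generates both
/// implementations from the fields, which must implement the traits themselves.
#[derive(Debug, PartialEq)]
struct Summary {
    backend: &'static str,
    result: Vec<f32>,
}

fn summarize<B: ToyBackend>(values: &[f32]) -> Summary {
    Summary {
        backend: B::name(),
        result: add_ones::<B>(values),
    }
}

fn main() {
    let input = [2.0, 3.0, 4.0, 5.0];
    // Same generic code, two different implementations selected at compile time.
    println!("{:?}", summarize::<LoopBackend>(&input));
    println!("{:?}", summarize::<IterBackend>(&input));
}
```

Swapping the type argument is the only change needed to move from one implementation to the other, which mirrors the way the backend type alias is swapped in the snippet above.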

Note that each time you create a new file in the src directory you also need to add explicitly this

module to the main.rs file. For instance after creating the model.rs, you need to add the following

at the top of the main file:

mod model;

Next, we need to instantiate the model for training.

#[derive(Config, Debug)]

pub struct ModelConfig {

num_classes: usize,

hidden_size: usize,

#[config(default = "0.5")]

dropout: f64,

}

impl ModelConfig {

/// Returns the initialized model.

pub fn init<B: Backend>(&self, device: &B::Device) -> Model<B> {

Model {

conv1: Conv2dConfig::new([1, 8], [3, 3]).init(device),

conv2: Conv2dConfig::new([8, 16], [3, 3]).init(device),

pool: AdaptiveAvgPool2dConfig::new([8, 8]).init(),

activation: Relu::new(),

linear1: LinearConfig::new(16 * 8 * 8, self.hidden_size).init(device),

linear2: LinearConfig::new(self.hidden_size, self.num_classes).init(device),

dropout: DropoutConfig::new(self.dropout).init(),

}

}

}

🦀 References

In the previous example, the init() method signature uses & to indicate that the parameter types

are references: &self, a reference to the current receiver (ModelConfig), and

device: &B::Device, a reference to the backend device.

pub fn init<B: Backend>(&self, device: &B::Device) -> Model<B> {

Model {

// ...

}

}

References in Rust allow us to point to a resource to access its data without owning it. The idea of ownership is quite core to Rust and is worth reading up on.

In a language like C, memory management is explicit and up to the programmer, which means it is easy to make mistakes. In a language like Java or Python, memory management is automatic with the help of a garbage collector. This is very safe and straightforward, but also incurs a runtime cost.

In Rust, memory management is rather unique. Aside from simple types that implement

Copy (e.g.,

primitives like integers, floats,

booleans and char), every value is owned by some variable called the owner. Ownership can be

transferred from one variable to another and sometimes a value can be borrowed. Once the owner

variable goes out of scope, the value is dropped, which means that any memory it allocated can be

freed safely.

Because the method does not own the self and device variables, the values the references point to will not be dropped when the references go out of scope at the end of the method.

For more information on references and borrowing, be sure to read the corresponding chapter in the Rust Book.
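The difference between borrowing and taking ownership can be observed in a few lines of plain Rust. This is a minimal sketch with a hypothetical Settings struct, not code from the guide.

```rust
/// Hypothetical settings struct, used only to illustrate ownership.
#[derive(Debug)]
struct Settings {
    hidden_size: usize,
}

/// Borrows the settings: the caller keeps ownership and can reuse the value.
fn describe(settings: &Settings) -> String {
    format!("hidden_size={}", settings.hidden_size)
}

/// Takes ownership: the value is moved into the function and dropped when it returns.
fn consume(settings: Settings) -> usize {
    settings.hidden_size
}

fn main() {
    let settings = Settings { hidden_size: 512 };

    // Borrowing twice is fine, since nothing is moved.
    let first = describe(&settings);
    let second = describe(&settings);
    println!("{first} / {second}");

    // Ownership is transferred here.
    let size = consume(settings);
    println!("{size}");

    // describe(&settings); // would not compile: `settings` was moved above
}
```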

When creating a custom neural network module, it is often a good idea to create a config alongside the model struct. This allows you to define default values for your network, thanks to the Config attribute. The benefit of this attribute is that it makes the configuration serializable, enabling you to painlessly save your model hyperparameters, enhancing your experimentation process. Note that a constructor will automatically be generated for your configuration. It takes as input values for the parameters that do not have default values, for example let config = ModelConfig::new(num_classes, hidden_size);. The default values can be overridden easily with builder-like methods (e.g., config.with_dropout(0.2);).
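To give an idea of what a constructor plus builder-like methods look like, here is a hand-written sketch in plain Rust. It is an assumption about the general shape of such code, not the code that Burn's Config derive actually generates; ToyModelConfig is a hypothetical name and serialization is left out entirely.

```rust
/// Hypothetical hand-written configuration, mirroring the fields of `ModelConfig`.
#[derive(Debug, Clone, PartialEq)]
struct ToyModelConfig {
    num_classes: usize,
    hidden_size: usize,
    dropout: f64,
}

impl ToyModelConfig {
    /// Constructor taking only the fields that have no default value.
    fn new(num_classes: usize, hidden_size: usize) -> Self {
        Self {
            num_classes,
            hidden_size,
            dropout: 0.5, // same default as in the guide's `ModelConfig`
        }
    }

    /// Builder-like method: consumes the config and returns it with `dropout` overridden.
    fn with_dropout(mut self, dropout: f64) -> Self {
        self.dropout = dropout;
        self
    }
}

fn main() {
    let default_config = ToyModelConfig::new(10, 512);
    let custom_config = ToyModelConfig::new(10, 512).with_dropout(0.2);
    println!(
        "{} classes, {} hidden units, dropout {} (default) vs {} (custom)",
        default_config.num_classes,
        default_config.hidden_size,
        default_config.dropout,
        custom_config.dropout
    );
}
```

Because each builder-like method returns the configuration, several overrides can be chained in one expression.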

The first implementation block is related to the initialization method. As we can see, all fields are set using the configuration of the corresponding neural network underlying module. In this specific case, we have chosen to expand the tensor channels from 1 to 8 with the first layer, then from 8 to 16 with the second layer, using a kernel size of 3 on all dimensions. We also use the adaptive average pooling module to reduce the dimensionality of the images to an 8 by 8 matrix, which we will flatten in the forward pass to obtain a resulting tensor with 1024 (16 * 8 * 8) features.
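The shape arithmetic behind these numbers can be checked with a few lines of plain Rust. The sketch below assumes 28 by 28 MNIST images and convolutions with stride 1 and no padding; those convolution settings are an assumption on our part, so adjust the arguments if your layers are configured differently. The helper names are hypothetical.

```rust
/// Output size of a convolution along one spatial dimension.
/// Standard formula: floor((input + 2 * padding - kernel) / stride) + 1.
fn conv_output_size(input: usize, kernel: usize, stride: usize, padding: usize) -> usize {
    (input + 2 * padding - kernel) / stride + 1
}

/// Number of features obtained when flattening [channels, height, width].
fn flattened_features(channels: usize, pooled: [usize; 2]) -> usize {
    channels * pooled[0] * pooled[1]
}

fn main() {
    // Assumed: 28x28 input, kernel size 3, stride 1, no padding.
    let after_conv1 = conv_output_size(28, 3, 1, 0);
    let after_conv2 = conv_output_size(after_conv1, 3, 1, 0);
    println!("after conv1: {after_conv1}x{after_conv1}");
    println!("after conv2: {after_conv2}x{after_conv2}");

    // The adaptive average pooling always outputs 8x8, whatever the input size.
    let features = flattened_features(16, [8, 8]);
    println!("flattened features: {features}");
}
```

Since the adaptive pooling fixes the spatial size at 8 by 8, the input size of the first linear layer depends only on the number of channels and the pooling target, not on the image resolution.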

Now let's see how the forward pass is defined.

impl<B: Backend> Model<B> {

/// # Shapes

/// - Images [batch_size, height, width]

/// - Output [batch_size, num_classes]

pub fn forward(&self, images: Tensor<B, 3>) -> Tensor<B, 2> {

let [batch_size, height, width] = images.dims();

// Create a channel at the second dimension.

let x = images.reshape([batch_size, 1, height, width]);

let x = self.conv1.forward(x); // [batch_size, 8, _, _]

let x = self.dropout.forward(x);

let x = self.conv2.forward(x); // [batch_size, 16, _, _]

let x = self.dropout.forward(x);

let x = self.activation.forward(x);

let x = self.pool.forward(x); // [batch_size, 16, 8, 8]

let x = x.reshape([batch_size, 16 * 8 * 8]);

let x = self.linear1.forward(x);

let x = self.dropout.forward(x);

let x = self.activation.forward(x);

self.linear2.forward(x) // [batch_size, num_classes]

}

}

For former PyTorch users, this might feel very intuitive, as each module is directly incorporated

into the code using an eager API. Note that no abstraction is imposed for the forward method. You

are free to define multiple forward functions with the names of your liking. Most of the neural

network modules already built with Burn use the forward nomenclature, simply because it is

standard in the field.

Similar to neural network modules, the Tensor struct given as a

parameter also takes the Backend trait as a generic argument, alongside its dimensionality. Even if it is not

used in this specific example, it is possible to add the kind of the tensor as a third generic

argument. For example, a 3-dimensional Tensor of different data types (float, int, bool) would be defined as follows:

Tensor<B, 3> // Float tensor (default)

Tensor<B, 3, Float> // Float tensor (explicit)

Tensor<B, 3, Int> // Int tensor

Tensor<B, 3, Bool> // Bool tensor

Note that the specific element type, such as f16, f32 and the like, will be defined later with the backend.
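The optional third argument relies on a Rust feature called default generic parameters. The following minimal sketch reproduces the idea with a hypothetical ToyTensor type that has no backend and stores no data; it is not Burn's Tensor, and the Kind trait and marker types here are defined locally for the illustration.

```rust
use std::marker::PhantomData;

// Marker types for the kind of a tensor (hypothetical, defined locally).
struct Float;
struct Int;
struct Bool;

/// Gives each marker type a printable name.
trait Kind {
    const NAME: &'static str;
}

impl Kind for Float {
    const NAME: &'static str = "Float";
}

impl Kind for Int {
    const NAME: &'static str = "Int";
}

impl Kind for Bool {
    const NAME: &'static str = "Bool";
}

/// Hypothetical rank- and kind-generic container; `K` defaults to `Float`.
struct ToyTensor<const D: usize, K = Float> {
    shape: [usize; D],
    kind: PhantomData<K>,
}

impl<const D: usize, K: Kind> ToyTensor<D, K> {
    fn new(shape: [usize; D]) -> Self {
        Self {
            shape,
            kind: PhantomData,
        }
    }

    fn describe(&self) -> String {
        format!("{} tensor of rank {} with shape {:?}", K::NAME, D, self.shape)
    }
}

fn main() {
    let a: ToyTensor<3> = ToyTensor::new([2, 28, 28]); // Float (default)
    let b: ToyTensor<3, Float> = ToyTensor::new([2, 28, 28]); // Float (explicit)
    let c: ToyTensor<1, Int> = ToyTensor::new([2]); // Int
    let d = ToyTensor::<2, Bool>::new([2, 2]); // Bool, via turbofish

    for line in [a.describe(), b.describe(), c.describe(), d.describe()] {
        println!("{line}");
    }
}
```

Note that the default only applies where a type is written out, as in the annotation on `a`; it does not help inference in a bare expression, which is why each binding above names its type one way or the other.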

Data

Typically, one trains a model on some dataset. Burn provides a library of very useful dataset sources and transformations, such as Hugging Face dataset utilities that allow you to download and store data into an SQLite database for extremely efficient data streaming and storage. For this guide though, we will use the MNIST dataset from burn::data::dataset::vision which requires no external dependency.

To iterate over a dataset efficiently, we will define a struct which will implement the Batcher

trait. The goal of a batcher is to map individual dataset items into a batched tensor that can be

used as input to our previously defined model.

Let us start by defining our dataset functionalities in a file src/data.rs. We shall omit some of the imports for brevity, but the full code for following this guide can be found in the examples/guide/ directory.

use burn::{

data::{dataloader::batcher::Batcher, dataset::vision::MnistItem},

prelude::*,

};

#[derive(Clone)]

pub struct MnistBatcher<B: Backend> {

device: B::Device,

}

impl<B: Backend> MnistBatcher<B> {

pub fn new(device: B::Device) -> Self {

Self { device }

}

}

This code block defines a batcher struct holding the device to which the tensors should be sent before being passed to the model. Note that the device is an associated type of the Backend trait since not all backends expose the same devices. As an example, the Libtorch-based backend exposes Cuda(gpu_index), Cpu, Vulkan and Metal devices, while the ndarray backend only exposes the Cpu device.

Next, we need to actually implement the batching logic.

#[derive(Clone, Debug)]

pub struct MnistBatch<B: Backend> {

pub images: Tensor<B, 3>,

pub targets: Tensor<B, 1, Int>,

}

impl<B: Backend> Batcher<MnistItem, MnistBatch<B>> for MnistBatcher<B> {

fn batch(&self, items: Vec<MnistItem>) -> MnistBatch<B> {

let images = items

.iter()

.map(|item| Data::<f32, 2>::from(item.image))

.map(|data| Tensor::<B, 2>::from_data(data.convert(), &self.device))

.map(|tensor| tensor.reshape([1, 28, 28]))

// Normalize: scale to [0, 1], then shift and scale so that mean=0 and std=1

// values mean=0.1307,std=0.3081 are from the PyTorch MNIST example

// https://github.com/pytorch/examples/blob/54f4572509891883a947411fd7239237dd2a39c3/mnist/main.py#L122

.map(|tensor| ((tensor / 255) - 0.1307) / 0.3081)

.collect();

let targets = items

.iter()

.map(|item| Tensor::<B, 1, Int>::from_data(

Data::from([(item.label as i64).elem()]),

&self.device

))

.collect();

let images = Tensor::cat(images, 0).to_device(&self.device);

let targets = Tensor::cat(targets, 0).to_device(&self.device);

MnistBatch { images, targets }

}

}

🦀 Iterators and Closures

The iterator pattern allows you to perform some tasks on a sequence of items in turn.

In this example, an iterator is created over the MnistItems in the vector items by calling the

iter method.

Iterator adaptors are methods defined on the Iterator trait that produce different iterators by

changing some aspect of the original iterator. Here, the map method is called in a chain to

transform the original data before consuming the final iterator with collect to obtain the

images and targets vectors. Both vectors are then concatenated into a single tensor for the

current batch.

You probably noticed that each call to map is different, as it defines a function to execute on

the iterator items at each step. These anonymous functions are called

closures in Rust. They're easy to

recognize due to their syntax which uses vertical bars ||. The vertical bars enclose the closure's input parameters (if any), while the rest of the expression defines the function to execute.

If we go back to the example, we can break down and comment the expression used to process the images.

let images = items // take items Vec<MnistItem>

.iter() // create an iterator over it

.map(|item| Data::<f32, 2>::from(item.image)) // for each item, convert the image to float32 data struct

.map(|data| Tensor::<B, 2>::from_data(data.convert(), &self.device)) // for each data struct, create a tensor on the device

.map(|tensor| tensor.reshape([1, 28, 28])) // for each tensor, reshape to the image dimensions [C, H, W]

.map(|tensor| ((tensor / 255) - 0.1307) / 0.3081) // for each image tensor, apply normalization

.collect(); // consume the resulting iterator & collect the values into a new vector

For more information on iterators and closures, be sure to check out the corresponding chapter in the Rust Book.
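The same chain of map calls and the same normalization arithmetic can be tried on plain numbers, without tensors. This is a minimal sketch: the normalize function is hypothetical, and representing pixels as u8 values is an assumption made for the illustration. The mean and standard deviation are the ones quoted in the batcher code.

```rust
// Normalization constants quoted in the batcher above.
const MEAN: f32 = 0.1307;
const STD: f32 = 0.3081;

/// Scales raw pixel intensities to [0, 1], then standardizes them.
/// (Hypothetical helper operating on plain numbers instead of tensors.)
fn normalize(pixels: &[u8]) -> Vec<f32> {
    pixels
        .iter() // create an iterator over the pixels
        .map(|&p| f32::from(p) / 255.0) // first closure: scale to [0, 1]
        .map(|x| (x - MEAN) / STD) // second closure: shift by the mean, divide by the std
        .collect() // consume the iterator into a new vector
}

fn main() {
    let row = [0u8, 128, 255];
    println!("{:?}", normalize(&row));
}
```

A black pixel (0) ends up at roughly -0.42 and a white pixel (255) at roughly 2.82, which is what the expression ((tensor / 255) - 0.1307) / 0.3081 computes element-wise on the image tensor.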

In the previous example, we implement the Batcher trait with a list of MnistItem as input and a single MnistBatch as output. The batch contains the images in the form of a 3D tensor, along with a targets tensor that contains the indexes of the correct digit class. The first step is to parse the image array into a Data struct. Burn provides the Data struct to encapsulate tensor storage information without being specific for a backend. When creating a tensor from data, we often need to convert the data precision to the current backend in use. This can be done with the .convert() method. By importing the burn::tensor::ElementConversion trait, you can call .elem() on a specific number to convert it to the current backend element type in use.

Training

We are now ready to write the necessary code to train our model on the MNIST dataset. We shall

define the code for this training section in the file: src/training.rs.

Instead of a simple tensor, the model should output an item that can be understood by the learner, a struct whose responsibility is to apply an optimizer to the model. The output struct is used for all metrics calculated during the training. Therefore it should include all the necessary information to calculate any metric that you want for a task.

Burn provides two basic output types: ClassificationOutput and RegressionOutput. They implement

the necessary trait to be used with metrics. It is possible to create your own item, but it is

beyond the scope of this guide.

Since the MNIST task is a classification problem, we will use the ClassificationOutput type.

impl<B: Backend> Model<B> {

pub fn forward_classification(

&self,

images: Tensor<B, 3>,

targets: Tensor<B, 1, Int>,

) -> ClassificationOutput<B> {

let output = self.forward(images);

let loss = CrossEntropyLoss::new(None, &output.device()).forward(output.clone(), targets.clone());

ClassificationOutput::new(loss, output, targets)

}

}

As evident from the preceding code block, we employ the cross-entropy loss module for loss calculation, without the inclusion of any padding token. We then return the classification output containing the loss, the output tensor with all logits and the targets.

Please take note that tensor operations receive owned tensors as input. For reusing a tensor

multiple times, you need to use the clone() function. There's no need to worry; this process won't

involve actual copying of the tensor data. Instead, it will simply indicate that the tensor is

employed in multiple instances, implying that certain operations won't be performed in place. In

summary, our API has been designed with owned tensors to optimize performance.

Moving forward, we will proceed with the implementation of both the training and validation steps for our model.

impl<B: AutodiffBackend> TrainStep<MnistBatch<B>, ClassificationOutput<B>> for Model<B> {

fn step(&self, batch: MnistBatch<B>) -> TrainOutput<ClassificationOutput<B>> {

let item = self.forward_classification(batch.images, batch.targets);

TrainOutput::new(self, item.loss.backward(), item)

}

}

impl<B: Backend> ValidStep<MnistBatch<B>, ClassificationOutput<B>> for Model<B> {

fn step(&self, batch: MnistBatch<B>) -> ClassificationOutput<B> {

self.forward_classification(batch.images, batch.targets)

}

}

Here we define the input and output types as generic arguments in the TrainStep and ValidStep.

We will call them MnistBatch and ClassificationOutput. In the training step, the computation of

gradients is straightforward, necessitating a simple invocation of backward() on the loss. Note

that contrary to PyTorch, gradients are not stored alongside each tensor parameter, but are rather

returned by the backward pass, as such: let gradients = loss.backward();. The gradient of a

parameter can be obtained with the grad function: let grad = tensor.grad(&gradients);. Although it

is not necessary when using the learner struct and the optimizers, it can prove to be quite useful

when debugging or writing custom training loops. One of the differences between the training and the

validation steps is that the former requires the backend to implement AutodiffBackend and not just

Backend. Otherwise, the backward function is not available, as the backend does not support

autodiff. We will see later how to create a backend with autodiff support.

🦀 Generic Type Constraints in Method Definitions

Although generic data types, trait and trait bounds were already introduced in previous sections of this guide, the previous code snippet might be a lot to take in at first.

In the example above, we implement the TrainStep and ValidStep trait for our Model struct,

which is generic over the Backend trait as has been covered before. These traits are provided by

burn::train and define a common step method that should be implemented for all structs. Since

the trait is generic over the input and output types, the trait implementation must specify the

concrete types used. This is where the additional type constraints appear

<MnistBatch<B>, ClassificationOutput<B>>. As we saw previously, the concrete input type for the

batch is MnistBatch, and the output of the forward pass is ClassificationOutput. The step

method signature matches the concrete input and output types.

For more details specific to constraints on generic types when defining methods, take a look at this section of the Rust Book.
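The pattern can be reduced to a small std-only sketch. The trait `Step` and the types `Batch`, `Summary` and `Averager` below are hypothetical stand-ins, not the definitions from burn::train; they only show how an implementation pins a trait's generic arguments to concrete types.

```rust
/// A trait that is generic over its input and output types
/// (hypothetical, std-only; similar in shape to a training step trait).
trait Step<TI, TO> {
    fn step(&self, item: TI) -> TO;
}

struct Batch {
    values: Vec<f32>,
}

struct Summary {
    mean: f32,
}

struct Averager;

// The implementation fixes the generic arguments to concrete types,
// so `step` must accept a `Batch` and return a `Summary`.
impl Step<Batch, Summary> for Averager {
    fn step(&self, item: Batch) -> Summary {
        let n = item.values.len().max(1) as f32;
        Summary { mean: item.values.iter().sum::<f32>() / n }
    }
}
```

Because the input and output types are part of the trait's generic arguments, the same struct could implement `Step` several times with different type pairs.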

Let us move on to establishing the practical training configuration.

#[derive(Config)]

pub struct TrainingConfig {

pub model: ModelConfig,

pub optimizer: AdamConfig,

#[config(default = 10)]

pub num_epochs: usize,

#[config(default = 64)]

pub batch_size: usize,

#[config(default = 4)]

pub num_workers: usize,

#[config(default = 42)]

pub seed: u64,

#[config(default = 1.0e-4)]

pub learning_rate: f64,

}

fn create_artifact_dir(artifact_dir: &str) {

// Remove existing artifacts beforehand to get an accurate learner summary

std::fs::remove_dir_all(artifact_dir).ok();

std::fs::create_dir_all(artifact_dir).ok();

}

pub fn train<B: AutodiffBackend>(artifact_dir: &str, config: TrainingConfig, device: B::Device) {

create_artifact_dir(artifact_dir);

config

.save(format!("{artifact_dir}/config.json"))

.expect("Config should be saved successfully");

B::seed(config.seed);

let batcher_train = MnistBatcher::<B>::new(device.clone());

let batcher_valid = MnistBatcher::<B::InnerBackend>::new(device.clone());

let dataloader_train = DataLoaderBuilder::new(batcher_train)

.batch_size(config.batch_size)

.shuffle(config.seed)

.num_workers(config.num_workers)

.build(MnistDataset::train());

let dataloader_test = DataLoaderBuilder::new(batcher_valid)

.batch_size(config.batch_size)

.shuffle(config.seed)

.num_workers(config.num_workers)

.build(MnistDataset::test());

let learner = LearnerBuilder::new(artifact_dir)

.metric_train_numeric(AccuracyMetric::new())

.metric_valid_numeric(AccuracyMetric::new())

.metric_train_numeric(LossMetric::new())

.metric_valid_numeric(LossMetric::new())

.with_file_checkpointer(CompactRecorder::new())

.devices(vec![device.clone()])

.num_epochs(config.num_epochs)

.summary()

.build(

config.model.init::<B>(&device),

config.optimizer.init(),

config.learning_rate,

);

let model_trained = learner.fit(dataloader_train, dataloader_test);

model_trained

.save_file(format!("{artifact_dir}/model"), &CompactRecorder::new())

.expect("Trained model should be saved successfully");

}

It is a good practice to use the Config derive to create the experiment configuration. In the

train function, the first thing we are doing is making sure the artifact_dir exists, using the

Rust standard library for file manipulation. All checkpoints, logging and metrics will be stored

under this directory. We then initialize our dataloaders using our previously created batcher. Since

no automatic differentiation is needed during the validation phase, the backend used for the

corresponding batcher is B::InnerBackend (see Backend). The autodiff capabilities

are available through a type system, making it nearly impossible to forget to deactivate gradient

calculation.
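The idea of switching autodiff off through the type system can be sketched with plain traits. Everything below is a hypothetical, std-only stand-in that reuses the names Backend, AutodiffBackend, InnerBackend and Autodiff purely for readability; it is not Burn's actual definition of these items.

```rust
/// Hypothetical stand-ins for the two backend traits (std-only sketch).
trait Backend {
    const TRACKS_GRADIENTS: bool;
}

trait AutodiffBackend: Backend {
    /// The same backend with gradient tracking stripped away.
    type InnerBackend: Backend;
}

struct Cpu;
struct Autodiff<B: Backend>(std::marker::PhantomData<B>);

impl Backend for Cpu {
    const TRACKS_GRADIENTS: bool = false;
}

impl<B: Backend> Backend for Autodiff<B> {
    const TRACKS_GRADIENTS: bool = true;
}

impl<B: Backend> AutodiffBackend for Autodiff<B> {
    type InnerBackend = B;
}

/// Training code is generic over `AutodiffBackend`; validation code
/// is instantiated with the inner backend, which cannot track gradients.
fn validation_tracks_gradients<B: AutodiffBackend>() -> bool {
    <B::InnerBackend as Backend>::TRACKS_GRADIENTS
}
```

Since the choice is encoded in the type, there is no runtime flag that could be left in the wrong state.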

Next, we create our learner with the accuracy and loss metric on both training and validation steps

along with the device and the number of epochs. We also configure the checkpointer using the CompactRecorder

to indicate how weights should be stored. This struct implements the Recorder trait, which makes

it capable of saving records for persistency.

We then build the learner with the model, the optimizer and the learning rate. Notably, the third argument of the build function should actually be a learning rate scheduler. When provided with a float as in our example, it is automatically transformed into a constant learning rate scheduler. The learning rate is not part of the optimizer config as it is often done in other frameworks, but rather passed as a parameter when executing the optimizer step. This avoids having to mutate the state of the optimizer and is therefore more functional. It makes no difference when using the learner struct, but it will be an essential nuance to grasp if you implement your own training loop.
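A rough std-only sketch of these two ideas follows: a float acting as a constant scheduler, and the learning rate being passed to the optimizer step instead of being stored in it. The trait `LrSchedule`, the struct `ExponentialDecay` and the function `sgd_step` are hypothetical names chosen for illustration, not Burn's API.

```rust
/// Hypothetical scheduler trait (std-only sketch, not Burn's definition).
trait LrSchedule {
    /// Returns the learning rate to use for the next optimizer step.
    fn step(&mut self) -> f64;
}

/// A bare float behaves as a schedule that never changes.
impl LrSchedule for f64 {
    fn step(&mut self) -> f64 {
        *self
    }
}

/// A non-constant schedule: multiply by `gamma` after every step.
struct ExponentialDecay {
    lr: f64,
    gamma: f64,
}

impl LrSchedule for ExponentialDecay {
    fn step(&mut self) -> f64 {
        let current = self.lr;
        self.lr *= self.gamma;
        current
    }
}

/// The optimizer step receives the learning rate as an argument
/// instead of keeping it as mutable optimizer state.
fn sgd_step(param: f64, grad: f64, lr: f64) -> f64 {
    param - lr * grad
}
```

In a custom training loop, you would query the scheduler once per iteration and forward the returned value to the optimizer step.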

Once the learner is created, we can simply call fit and provide the training and validation

dataloaders. For the sake of simplicity in this example, we employ the test set as the validation

set; however, we do not recommend this practice for actual usage.

Finally, the trained model is returned by the fit method. The trained weights are then saved using

the CompactRecorder. This recorder employs the MessagePack format with half precision, f16 for

floats and i16 for integers. Other recorders are available, offering support for various formats,

such as BinCode and JSON, with or without compression. Any backend, regardless of precision, can

load recorded data of any kind.

Backend

We have effectively written most of the necessary code to train our model. However, we have not

explicitly designated the backend to be used at any point. This will be defined in the main

entrypoint of our program, namely the main function defined in src/main.rs.

use burn::optim::AdamConfig;

use burn::backend::{Autodiff, Wgpu, wgpu::AutoGraphicsApi};

use crate::model::ModelConfig;

fn main() {

type MyBackend = Wgpu<AutoGraphicsApi, f32, i32>;

type MyAutodiffBackend = Autodiff<MyBackend>;

let device = burn::backend::wgpu::WgpuDevice::default();

crate::training::train::<MyAutodiffBackend>(

"/tmp/guide",

crate::training::TrainingConfig::new(ModelConfig::new(10, 512), AdamConfig::new()),

device.clone(),

);

}

In this example, we use the Wgpu backend which is compatible with any operating system and will

use the GPU. For other options, see the Burn README. This backend type takes the graphics API, the

float type and the int type as generic arguments that will be used during the training. By leaving

the graphics API as AutoGraphicsApi, it should automatically use an API available on your machine.

The autodiff backend is simply the same backend, wrapped within the Autodiff struct which imparts

differentiability to any backend.

We call the train function defined earlier with a directory for artifacts, the configuration of

the model (the number of digit classes is 10 and the hidden dimension is 512), the optimizer

configuration which in our case will be the default Adam configuration, and the device which can be

obtained from the backend.

You can now train your freshly created model with the command:

cargo run --release

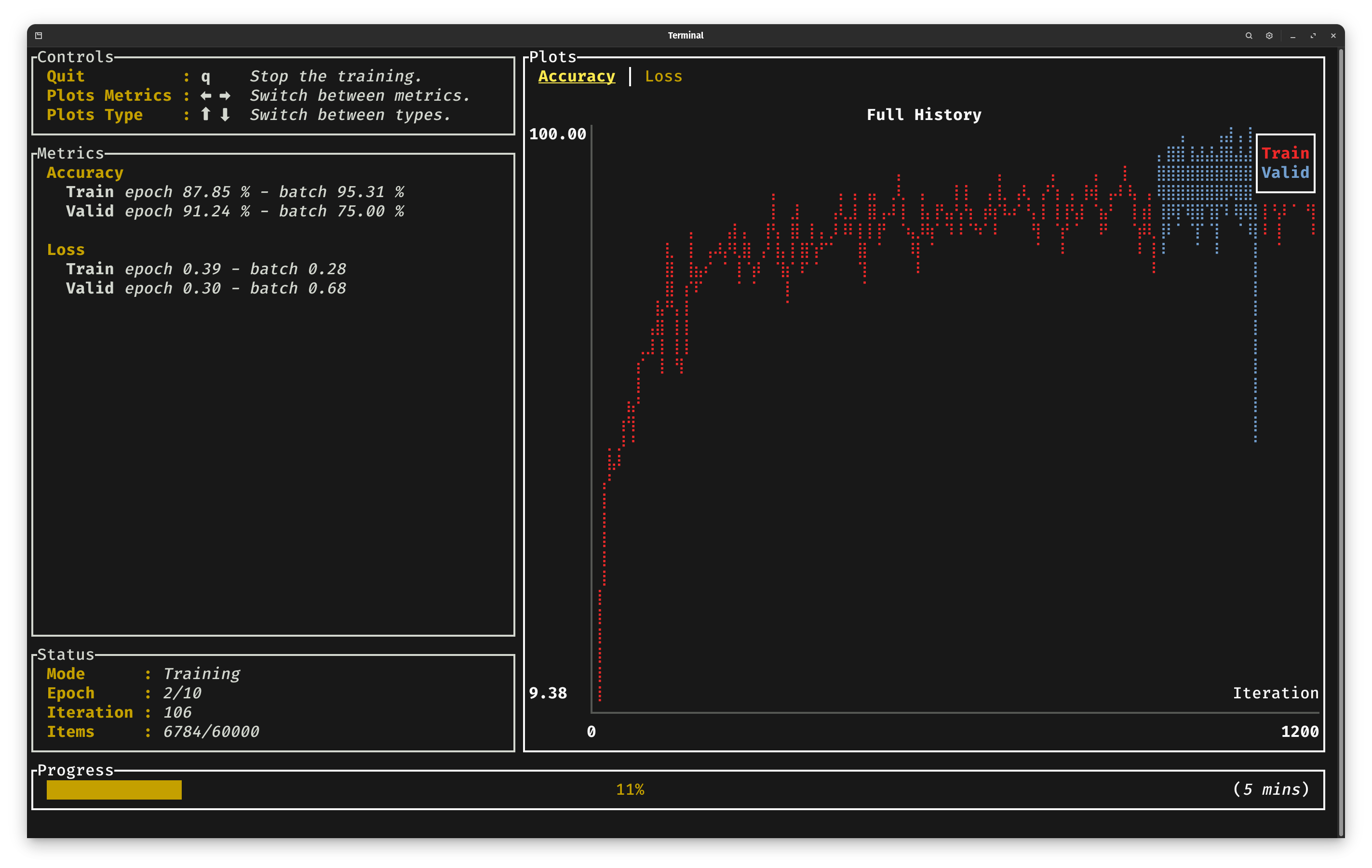

When running the example, you should see the training progression through a basic CLI dashboard:

Inference

Now that we have trained our model, the next natural step is to use it for inference.

You need two things in order to load weights for a model: the model's record and the model's config.

Since parameters in Burn are lazily initialized, the ModelConfig::init function performs no

allocation and executes no GPU/CPU kernels. The weights are initialized when used for the first

time, therefore you can safely use config.init(&device).load_record(record) without any meaningful

performance cost. Let's create a simple infer function in a new file src/inference.rs which we

will use to load our trained model.

pub fn infer<B: Backend>(artifact_dir: &str, device: B::Device, item: MnistItem) {

let config = TrainingConfig::load(format!("{artifact_dir}/config.json"))

.expect("Config should exist for the model");

let record = CompactRecorder::new()

.load(format!("{artifact_dir}/model").into(), &device)

.expect("Trained model should exist");

let model = config.model.init::<B>(&device).load_record(record);

let label = item.label;

let batcher = MnistBatcher::new(device);

let batch = batcher.batch(vec![item]);

let output = model.forward(batch.images);

let predicted = output.argmax(1).flatten::<1>(0, 1).into_scalar();

println!("Predicted {} Expected {}", predicted, label);

}

The first step is to load the configuration of the training to fetch the correct model configuration. Then we can fetch the record using the same recorder as we used during training. Finally we can init the model with the configuration and the record. For simplicity we can use the same batcher used during the training to pass from a MnistItem to a tensor.
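The prediction itself is simply the index of the largest logit. As a std-only sketch of what the argmax call computes for a single sample (the `argmax` helper below is hypothetical and works on a slice, not on a Burn tensor):

```rust
/// Index of the largest logit, i.e. the predicted class for one sample.
/// Returns `None` for an empty slice. Hypothetical helper, not Burn's `argmax`.
fn argmax(logits: &[f32]) -> Option<usize> {
    logits
        .iter()
        .enumerate()
        // `total_cmp` gives a total order on floats, so NaN cannot cause a panic.
        .max_by(|a, b| a.1.total_cmp(b.1))
        .map(|(index, _)| index)
}
```

For MNIST the slice would hold ten logits, one per digit class, and the returned index is the predicted digit.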

By running the infer function, you should see the predictions of your model!

Add the call to infer to the main.rs file after the train function call:

crate::inference::infer::<MyBackend>(

"/tmp/guide",

device,

burn::data::dataset::vision::MnistDataset::test()

.get(42)

.unwrap(),

);

The number 42 is the index of the image in the MNIST dataset. You can explore and verify them using

this MNIST viewer.

Conclusion

In this short guide, we've introduced you to the fundamental building blocks for getting started with Burn. While there's still plenty to explore, our goal has been to provide you with the essential knowledge to kickstart your productivity within the framework.

Building Blocks

In this section, we'll guide you through the core elements that make up Burn. We'll walk you through the key components that serve as the building blocks of the framework and your future projects.

As you explore Burn, you might notice that we occasionally draw comparisons to PyTorch. We believe it can provide a smoother learning curve and help you grasp the nuances more effectively.

Backend

Nearly everything in Burn is based on the Backend trait, which enables you to run tensor

operations using different implementations without having to modify your code. While a backend may

not necessarily have autodiff capabilities, the AutodiffBackend trait specifies when autodiff is

needed. This trait not only abstracts operations but also tensor, device, and element types,

providing each backend the flexibility they need. It's worth noting that the trait assumes eager

mode since Burn fully supports dynamic graphs. However, we may create another API to assist with

integrating graph-based backends, without requiring any changes to the user's code.

Users are not expected to directly use the backend trait methods, as it is primarily designed with backend developers in mind rather than Burn users. Therefore, most Burn userland APIs are generic across backends. This approach helps users discover the API more organically with proper autocomplete and documentation.

Tensor

As previously explained in the model section, the Tensor struct has 3 generic arguments: the backend B, the dimensionality D, and the data type.

Tensor<B, D> // Float tensor (default)

Tensor<B, D, Float> // Explicit float tensor

Tensor<B, D, Int> // Int tensor

Tensor<B, D, Bool> // Bool tensor

Note that the specific element types used for Float, Int, and Bool tensors are defined by

backend implementations.
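The role of the dimensionality argument can be pictured with const generics. In the std-only sketch below, `NdArray` is a hypothetical type (not Burn's Tensor): the number of dimensions D is fixed at compile time, while the extent of each dimension is an ordinary runtime value.

```rust
/// Std-only sketch: the number of dimensions `D` is part of the type,
/// while the shape is stored as a runtime value.
/// `NdArray` is a hypothetical type, not Burn's `Tensor`.
struct NdArray<const D: usize> {
    shape: [usize; D],
    data: Vec<f32>,
}

impl<const D: usize> NdArray<D> {
    /// Returns `None` if the data length does not match the shape.
    fn new(shape: [usize; D], data: Vec<f32>) -> Option<Self> {
        let expected: usize = shape.iter().product();
        (expected == data.len()).then(|| Self { shape, data })
    }

    fn dims(&self) -> [usize; D] {
        self.shape
    }
}
```

With this layout, a value of type `NdArray<1>` can hold 5 elements or 5000, but it can never be confused with an `NdArray<2>` at compile time.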

Burn Tensors are defined by the number of dimensions D in their declaration, as opposed to their shape. The actual shape of the tensor is inferred from its initialization. For example, a Tensor of size (5,) is initialized as below:

let floats = [1.0, 2.0, 3.0, 4.0, 5.0];

// Get the default device

let device = Default::default();

// correct: Tensor is 1-Dimensional with 5 elements

let tensor_1 = Tensor::<Backend, 1>::from_floats(floats, &device);

// incorrect: let tensor_1 = Tensor::<Backend, 5>::from_floats(floats, &device);

// this will lead to an error and is for creating a 5-D tensor

Initialization

Burn Tensors are primarily initialized using the from_data() method which takes the Data struct

as input. The Data struct has two fields: value & shape. To retrieve the data from a tensor, the

method .to_data() should be employed when intending to reuse the tensor afterward. Alternatively,

.into_data() is recommended for one-time use. Let's look at a couple of examples for initializing

a tensor from different inputs.

// Initialization from a given Backend (Wgpu)

let tensor_1 = Tensor::<Wgpu, 1>::from_data([1.0, 2.0, 3.0], &device);

// Initialization from a generic Backend

let tensor_2 = Tensor::<Backend, 1>::from_data(Data::from([1.0, 2.0, 3.0]).convert(), &device);

// Initialization using from_floats (Recommended for f32 ElementType)

// Will be converted to Data internally.

// `.convert()` not needed as from_floats() defined for fixed ElementType

let tensor_3 = Tensor::<Backend, 1>::from_floats([1.0, 2.0, 3.0], &device);

// Initialization of Int Tensor from array slices

let arr: [i32; 6] = [1, 2, 3, 4, 5, 6];

let tensor_4 = Tensor::<Backend, 1, Int>::from_data(Data::from(&arr[0..3]).convert(), &device);

// Initialization from a custom type

struct BodyMetrics {

age: i8,

height: i16,

weight: f32

}

let bmi = BodyMetrics{

age: 25,

height: 180,

weight: 80.0

};

let data = Data::from([bmi.age as f32, bmi.height as f32, bmi.weight]).convert();

let tensor_5 = Tensor::<Backend, 1>::from_data(data, &device);

The .convert() method for the Data struct is called to convert the data's primitive type to the

element type of the backend in use. With the .from_floats() method the ElementType is fixed as f32

and therefore no convert operation is required across backends. This conversion can also be done at

the element-wise level:

let tensor_6 = Tensor::<B, 1, Int>::from_data(Data::from([(item.age as i64).elem()]), &device);

The ElementConversion trait however needs to be imported for the element-wise operation.

Ownership and Cloning

Almost all Burn operations take ownership of the input tensors. Therefore, reusing a tensor multiple times will necessitate cloning it. Let's look at an example to understand the ownership rules and cloning better. Suppose we want to do a simple min-max normalization of an input tensor.

let input = Tensor::<Wgpu, 1>::from_floats([1.0, 2.0, 3.0, 4.0], &device);

let min = input.min();

let max = input.max();

let input = (input - min).div(max - min);

With PyTorch tensors, the above code would work as expected. However, Rust's strict ownership rules

will give an error and prevent using the input tensor after the first .min() operation. The

ownership of the input tensor is transferred to the variable min and the input tensor is no longer

available for further operations. Burn Tensors like most complex primitives do not implement the

Copy trait and therefore have to be cloned explicitly. Now let's rewrite a working example of

doing min-max normalization with cloning.

let input = Tensor::<Wgpu, 1>::from_floats([1.0, 2.0, 3.0, 4.0], &device);

let min = input.clone().min();

let max = input.clone().max();

let input = (input.clone() - min.clone()).div(max - min);

println!("{:?}", input.to_data());// Success: [0.0, 0.33333334, 0.6666667, 1.0]

// Notice that max, min have been moved in last operation so

// the below print will give an error.

// If we want to use them for further operations,

// they will need to be cloned in similar fashion.

// println!("{:?}", min.to_data());

We don't need to be worried about memory overhead because with cloning, the tensor's buffer isn't copied; only its reference count is increased. This makes it possible to determine exactly how many times a tensor is used, which is very convenient for reusing tensor buffers or even fusing operations into a single kernel (burn-fusion). For that reason, we don't provide explicit inplace operations. If a tensor is used only one time, inplace operations will always be used when available.
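The mechanism can be illustrated with the standard library's reference-counted pointer. The sketch below is an analogy under the assumption that a tensor is a handle to a shared buffer; `Handle` and `add_scalar` are hypothetical names and this is not how Burn's backends are implemented.

```rust
use std::rc::Rc;

/// Hypothetical handle around a shared buffer (std-only sketch, not Burn's tensor).
#[derive(Clone)]
struct Handle {
    buffer: Rc<Vec<f32>>,
}

impl Handle {
    fn new(values: Vec<f32>) -> Self {
        Self { buffer: Rc::new(values) }
    }

    /// Adds `x` to every element, consuming the handle.
    /// Returns the result and whether the buffer was reused in place.
    fn add_scalar(self, x: f32) -> (Handle, bool) {
        match Rc::try_unwrap(self.buffer) {
            // Sole owner: mutate the existing allocation.
            Ok(mut values) => {
                values.iter_mut().for_each(|v| *v += x);
                (Handle::new(values), true)
            }
            // Still shared: write into a new buffer and leave the original untouched.
            Err(shared) => {
                let values = shared.iter().map(|v| v + x).collect();
                (Handle::new(values), false)
            }
        }
    }
}
```

Cloning a `Handle` only bumps the reference count, and because operations consume their input, the operation can check whether it holds the last reference and reuse the buffer when it does.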

Tensor Operations

Normally with PyTorch, explicit inplace operations aren't supported during the backward pass, making them useful only for data preprocessing or inference-only model implementations. With Burn, you can focus more on what the model should do, rather than on how to do it. We take the responsibility of making your code run as fast as possible during training as well as inference. The same principles apply to broadcasting; all operations support broadcasting unless specified otherwise.
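As a reminder of what broadcasting means for shapes, here is a small std-only sketch that assumes the usual convention: two extents are compatible when they are equal or when one of them is 1. The `broadcast_shape` helper is hypothetical and only computes the resulting shape for two operands of the same rank.

```rust
/// Computes the broadcast shape of two shapes of equal rank, or `None`
/// if they are incompatible. Hypothetical helper (std-only sketch).
fn broadcast_shape<const D: usize>(lhs: [usize; D], rhs: [usize; D]) -> Option<[usize; D]> {
    let mut out = [0; D];
    for i in 0..D {
        out[i] = match (lhs[i], rhs[i]) {
            // Equal extents are kept as they are.
            (a, b) if a == b => a,
            // An extent of 1 is stretched to match the other operand.
            (1, b) => b,
            (a, 1) => a,
            // Anything else cannot be broadcast.
            _ => return None,
        };
    }
    Some(out)
}
```

For example, adding a column of shape [2, 1] to a row of shape [1, 3] produces a result of shape [2, 3].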

Here, we provide a list of all supported operations along with their PyTorch equivalents. Note that for the sake of simplicity, we ignore type signatures. For more details, refer to the full documentation.

Basic Operations

Those operations are available for all tensor kinds: Int, Float, and Bool.

| Burn | PyTorch Equivalent |
|---|---|
| Tensor::cat(tensors, dim) | torch.cat(tensors, dim) |
| Tensor::empty(shape, device) | torch.empty(shape, device=device) |
| Tensor::from_primitive(primitive) | N/A |
| Tensor::stack(tensors, dim) | torch.stack(tensors, dim) |
| tensor.all() | tensor.all() |
| tensor.all_dim(dim) | tensor.all(dim) |
| tensor.any() | tensor.any() |
| tensor.any_dim(dim) | tensor.any(dim) |
| tensor.chunk(num_chunks, dim) | tensor.chunk(num_chunks, dim) |
| tensor.device() | tensor.device |
| tensor.dims() | tensor.size() |
| tensor.equal(other) | x == y |
| tensor.expand(shape) | tensor.expand(shape) |
| tensor.flatten(start_dim, end_dim) | tensor.flatten(start_dim, end_dim) |
| tensor.flip(axes) | tensor.flip(axes) |
| tensor.into_data() | N/A |
| tensor.into_primitive() | N/A |
| tensor.into_scalar() | tensor.item() |
| tensor.narrow(dim, start, length) | tensor.narrow(dim, start, length) |
| tensor.not_equal(other) | x != y |
| tensor.permute(axes) | tensor.permute(axes) |
| tensor.repeat(2, 4) | tensor.repeat([1, 1, 4]) |
| tensor.reshape(shape) | tensor.view(shape) |
| tensor.shape() | tensor.shape |
| tensor.slice(ranges) | tensor[(*ranges,)] |
| tensor.slice_assign(ranges, values) | tensor[(*ranges,)] = values |
| tensor.squeeze(dim) | tensor.squeeze(dim) |
| tensor.to_data() | N/A |
| tensor.to_device(device) | tensor.to(device) |
| tensor.unsqueeze() | tensor.unsqueeze(0) |
| tensor.unsqueeze_dim(dim) | tensor.unsqueeze(dim) |

Numeric Operations

Those operations are available for numeric tensor kinds: Float and Int.

| Burn | PyTorch Equivalent |
|---|---|
| Tensor::eye(size, device) | torch.eye(size, device=device) |
| Tensor::full(shape, fill_value, device) | torch.full(shape, fill_value, device=device) |
| Tensor::ones(shape, device) | torch.ones(shape, device=device) |
| Tensor::zeros(shape, device) | torch.zeros(shape, device=device) |
| tensor.abs() | torch.abs(tensor) |
| tensor.add(other) or tensor + other | tensor + other |
| tensor.add_scalar(scalar) or tensor + scalar | tensor + scalar |
| tensor.all_close(other, atol, rtol) | torch.allclose(tensor, other, atol, rtol) |
| tensor.argmax(dim) | tensor.argmax(dim) |
| tensor.argmin(dim) | tensor.argmin(dim) |
| tensor.argsort(dim) | tensor.argsort(dim) |
| tensor.argsort_descending(dim) | tensor.argsort(dim, descending=True) |
| tensor.bool() | tensor.bool() |
| tensor.clamp(min, max) | torch.clamp(tensor, min=min, max=max) |
| tensor.clamp_max(max) | torch.clamp(tensor, max=max) |
| tensor.clamp_min(min) | torch.clamp(tensor, min=min) |
| tensor.div(other) or tensor / other | tensor / other |
| tensor.div_scalar(scalar) or tensor / scalar | tensor / scalar |
| tensor.equal_elem(other) | tensor.eq(other) |
| tensor.gather(dim, indices) | torch.gather(tensor, dim, indices) |
| tensor.greater(other) | tensor.gt(other) |
| tensor.greater_elem(scalar) | tensor.gt(scalar) |
| tensor.greater_equal(other) | tensor.ge(other) |
| tensor.greater_equal_elem(scalar) | tensor.ge(scalar) |
| tensor.is_close(other, atol, rtol) | torch.isclose(tensor, other, atol, rtol) |
| tensor.lower(other) | tensor.lt(other) |
| tensor.lower_elem(scalar) | tensor.lt(scalar) |
| tensor.lower_equal(other) | tensor.le(other) |
| tensor.lower_equal_elem(scalar) | tensor.le(scalar) |
| tensor.mask_fill(mask, value) | tensor.masked_fill(mask, value) |
| tensor.mask_where(mask, value_tensor) | torch.where(mask, value_tensor, tensor) |
| tensor.max() | tensor.max() |
| tensor.max_dim(dim) | tensor.max(dim, keepdim=True) |
| tensor.max_dim_with_indices(dim) | N/A |
| tensor.max_pair(other) | torch.Tensor.max(a,b) |
| tensor.mean() | tensor.mean() |
| tensor.mean_dim(dim) | tensor.mean(dim, keepdim=True) |
| tensor.min() | tensor.min() |
| tensor.min_dim(dim) | tensor.min(dim, keepdim=True) |
| tensor.min_dim_with_indices(dim) | N/A |
| tensor.min_pair(other) | torch.Tensor.min(a,b) |
| tensor.mul(other) or tensor * other | tensor * other |
| tensor.mul_scalar(scalar) or tensor * scalar | tensor * scalar |
| tensor.neg() or -tensor | -tensor |
| tensor.not_equal_elem(scalar) | tensor.ne(scalar) |
| tensor.pad(pads, value) | torch.nn.functional.pad(input, pad, value) |
| tensor.powf(other) or tensor.powi(intother) | tensor.pow(other) |
| tensor.powf_scalar(scalar) or tensor.powi_scalar(intscalar) | tensor.pow(scalar) |
| tensor.prod() | tensor.prod() |
| tensor.prod_dim(dim) | tensor.prod(dim, keepdim=True) |
| tensor.scatter(dim, indices, values) | tensor.scatter_add(dim, indices, values) |
| tensor.select(dim, indices) | tensor.index_select(dim, indices) |
| tensor.select_assign(dim, indices, values) | N/A |
| tensor.sign() | tensor.sign() |
| tensor.sort(dim) | tensor.sort(dim).values |
| tensor.sort_descending(dim) | tensor.sort(dim, descending=True).values |
| tensor.sort_descending_with_indices(dim) | tensor.sort(dim, descending=True) |
| tensor.sort_with_indices(dim) | tensor.sort(dim) |
| tensor.sub(other) or tensor - other | tensor - other |
| tensor.sub_scalar(scalar) or tensor - scalar | tensor - scalar |
| tensor.sum() | tensor.sum() |
| tensor.sum_dim(dim) | tensor.sum(dim, keepdim=True) |
| tensor.topk(k, dim) | tensor.topk(k, dim).values |
| tensor.topk_with_indices(k, dim) | tensor.topk(k, dim) |
| tensor.tril(diagonal) | torch.tril(tensor, diagonal) |
| tensor.triu(diagonal) | torch.triu(tensor, diagonal) |

Float Operations

Those operations are only available for Float tensors.

| Burn API | PyTorch Equivalent |
|---|---|
| tensor.cos() | tensor.cos() |
| tensor.erf() | tensor.erf() |
| tensor.exp() | tensor.exp() |
| Tensor::from_floats(floats, device) | N/A |
| Tensor::from_full_precision(tensor) | N/A |
| tensor.int() | Similar to tensor.to(torch.long) |
| tensor.log() | tensor.log() |
| tensor.log1p() | tensor.log1p() |
| tensor.matmul(other) | tensor.matmul(other) |
| Tensor::one_hot(index, num_classes, device) | N/A |
| tensor.ones_like() | torch.ones_like(tensor) |
| Tensor::random(shape, distribution, device) | N/A |
| tensor.random_like(distribution) | torch.rand_like() only uniform |
| tensor.recip() | tensor.reciprocal() |
| tensor.sin() | tensor.sin() |
| tensor.sqrt() | tensor.sqrt() |
| tensor.swap_dims(dim1, dim2) | tensor.transpose(dim1, dim2) |
| tensor.tanh() | tensor.tanh() |
| tensor.to_full_precision() | tensor.to(torch.float) |
| tensor.transpose() | tensor.T |
| tensor.var(dim) | tensor.var(dim) |
| tensor.var_bias(dim) | N/A |
| tensor.var_mean(dim) | N/A |
| tensor.var_mean_bias(dim) | N/A |
| tensor.zeros_like() | torch.zeros_like(tensor) |

Int Operations

Those operations are only available for Int tensors.

| Burn API | PyTorch Equivalent |
|---|---|
| Tensor::arange(5..10, device) | torch.arange(start=5, end=10, device=device) |
| Tensor::arange_step(5..10, 2, device) | torch.arange(start=5, end=10, step=2, device=device) |
| tensor.float() | tensor.to(torch.float) |
| Tensor::from_ints(ints) | N/A |
| Tensor::int_random(shape, distribution, device) | N/A |

Bool Operations

Those operations are only available for Bool tensors.

| Burn API | PyTorch Equivalent |
|---|---|
| Tensor::diag_mask(shape, diagonal) | N/A |
| Tensor::tril_mask(shape, diagonal) | N/A |
| Tensor::triu_mask(shape, diagonal) | N/A |
| tensor.argwhere() | tensor.argwhere() |
| tensor.float() | tensor.to(torch.float) |
| tensor.int() | tensor.to(torch.long) |
| tensor.nonzero() | tensor.nonzero(as_tuple=True) |
| tensor.not() | tensor.logical_not() |

Activation Functions

| Burn API | PyTorch Equivalent |
|---|---|
| activation::gelu(tensor) | nn.functional.gelu(tensor) |
| activation::leaky_relu(tensor, negative_slope) | nn.functional.leaky_relu(tensor, negative_slope) |
| activation::log_sigmoid(tensor) | nn.functional.log_sigmoid(tensor) |
| activation::log_softmax(tensor, dim) | nn.functional.log_softmax(tensor, dim) |
| activation::mish(tensor) | nn.functional.mish(tensor) |
| activation::prelu(tensor, alpha) | nn.functional.prelu(tensor, weight) |
| activation::quiet_softmax(tensor, dim) | N/A |
| activation::relu(tensor) | nn.functional.relu(tensor) |
| activation::sigmoid(tensor) | nn.functional.sigmoid(tensor) |
| activation::silu(tensor) | nn.functional.silu(tensor) |
| activation::softmax(tensor, dim) | nn.functional.softmax(tensor, dim) |
| activation::softplus(tensor, beta) | nn.functional.softplus(tensor, beta) |
| activation::tanh(tensor) | nn.functional.tanh(tensor) |

Autodiff

Burn's tensor also supports autodifferentiation, which is an essential part of any deep learning

framework. We introduced the Backend trait in the previous section, but Burn also

has another trait for autodiff: AutodiffBackend.

However, not all tensors support auto-differentiation; you need a backend that implements both the

Backend and AutodiffBackend traits. Fortunately, you can add autodifferentiation capabilities to any

backend using a backend decorator: type MyAutodiffBackend = Autodiff<MyBackend>. This

decorator implements both the AutodiffBackend and Backend traits by maintaining a dynamic

computational graph and utilizing the inner backend to execute tensor operations.

The AutodiffBackend trait adds new operations on float tensors that can't be called otherwise. It also

provides a new associated type, B::Gradients, where each calculated gradient resides.

fn calculate_gradients<B: AutodiffBackend>(tensor: Tensor<B, 2>) -> B::Gradients {

let mut gradients = tensor.clone().backward();

let tensor_grad = tensor.grad(&gradients); // get

let tensor_grad = tensor.grad_remove(&mut gradients); // pop

gradients

}

Note that some functions will always be available even if the backend doesn't implement the

AutodiffBackend trait. In such cases, those functions will do nothing.

| Burn API | PyTorch Equivalent |
|---|---|
| tensor.detach() | tensor.detach() |
| tensor.require_grad() | tensor.requires_grad_() |
| tensor.is_require_grad() | tensor.requires_grad |
| tensor.set_require_grad(require_grad) | tensor.requires_grad_(require_grad) |

However, you're unlikely to make any mistakes since you can't call backward on a tensor that is on

a backend that doesn't implement AutodiffBackend. Additionally, you can't retrieve the gradient of a

tensor without an autodiff backend.

Difference with PyTorch

The way Burn handles gradients is different from PyTorch. First, when calling backward, each

parameter doesn't have its grad field updated. Instead, the backward pass returns all the

calculated gradients in a container. This approach offers numerous benefits, such as the ability to

easily send gradients to other threads.

You can also retrieve the gradient for a specific parameter using the grad method on a tensor.

Since this method takes the gradients as input, it's hard to forget to call backward beforehand.

Note that sometimes, using grad_remove can improve performance by allowing inplace operations.
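The container-based design can be sketched without any tensor library. In the std-only example below, `Gradients`, `Param` and `backward_sum_of_squares` are hypothetical names: the backward pass returns a container keyed by parameter id, and the "get" and "pop" accessors mirror the roles of grad and grad_remove described above.

```rust
use std::collections::HashMap;

/// Hypothetical gradient container keyed by parameter id (std-only sketch).
struct Gradients {
    by_id: HashMap<u32, Vec<f64>>,
}

/// A parameter owns its values and an id, but has no gradient field.
struct Param {
    id: u32,
    values: Vec<f64>,
}

impl Param {
    /// Looks up this parameter's gradient and returns a copy ("get").
    fn grad(&self, grads: &Gradients) -> Option<Vec<f64>> {
        grads.by_id.get(&self.id).cloned()
    }

    /// Takes this parameter's gradient out of the container ("pop"),
    /// handing over the buffer without copying it.
    fn grad_remove(&self, grads: &mut Gradients) -> Option<Vec<f64>> {
        grads.by_id.remove(&self.id)
    }
}

/// Backward pass of loss = sum(w_i^2), whose gradient is 2 * w_i.
/// The gradients are returned rather than written into the parameter.
fn backward_sum_of_squares(w: &Param) -> Gradients {
    let grad = w.values.iter().map(|v| 2.0 * v).collect();
    Gradients { by_id: HashMap::from([(w.id, grad)]) }
}
```

Since the container is an ordinary value, it can be moved to another thread or merged with other containers without touching the parameters themselves.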

In PyTorch, when you don't need gradients for inference or validation, you typically need to scope your code using a block.

# Inference mode
with torch.inference_mode():
    # your code
    ...

# Or no grad
with torch.no_grad():
    # your code
    ...

With Burn, you don't need to wrap the backend with the Autodiff for inference, and you

can call inner() to obtain the inner tensor, which is useful for validation.

/// Use `B: AutodiffBackend`

fn example_validation<B: AutodiffBackend>(tensor: Tensor<B, 2>) {

let inner_tensor: Tensor<B::InnerBackend, 2> = tensor.inner();

let _ = inner_tensor + 5;

}

/// Use `B: Backend`

fn example_inference<B: Backend>(tensor: Tensor<B, 2>) {

let _ = tensor + 5;

// ...

}

Gradients with Optimizers

We've seen how gradients can be used with tensors, but the process is a bit different when working

with optimizers from burn-core. To work with the Module trait, a translation step is required to

link tensor parameters with their gradients. This step is necessary to easily support gradient

accumulation and training on multiple devices, where each module can be forked and run on different

devices in parallel. We'll explore this topic in more depth in the Module section.

Module

The Module derive allows you to create your own neural network modules, similar to PyTorch. The

derive function only generates the necessary methods to essentially act as a parameter container for

your type; it makes no assumptions about how the forward pass is declared.

use burn::module::Module;

use burn::nn::{Dropout, Gelu, Linear};

use burn::tensor::{backend::Backend, Tensor};

#[derive(Module, Debug)]

pub struct PositionWiseFeedForward<B: Backend> {

linear_inner: Linear<B>,

linear_outer: Linear<B>,

dropout: Dropout,

gelu: Gelu,

}

impl<B: Backend> PositionWiseFeedForward<B> {

/// Normal method added to a struct.

pub fn forward<const D: usize>(&self, input: Tensor<B, D>) -> Tensor<B, D> {

let x = self.linear_inner.forward(input);

let x = self.gelu.forward(x);

let x = self.dropout.forward(x);

self.linear_outer.forward(x)

}

}

Note that all fields declared in the struct must also implement the Module trait.

Tensor

If you want to create your own module that contains tensors, and not just other modules defined with

the Module derive, you need to be careful to achieve the behavior you want.

- Param<Tensor<B, D>>: If you want the tensor to be included as a parameter of your modules, you need to wrap the tensor in a Param struct. This will create an ID that will be used to identify this parameter. This is essential when performing module optimization and when saving states such as optimizer and module checkpoints. Note that a module's record only contains parameters.

- Param<Tensor<B, D>>.set_require_grad(false): Use this if you want the tensor to be included as a parameter of your modules, and therefore saved with the module's weights, but don't want it to be updated by the optimizer.

- Tensor<B, D>: Use this if you want the tensor to act as a constant that can be recreated when instantiating a module. This can be useful when generating sinusoidal embeddings, for example.
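
The three cases can be sketched with a toy module written against the standard library only. None of the types below come from Burn: `ToyParam`, `ToyModule`, and their methods are hypothetical stand-ins, and the update rule is plain gradient descent chosen for brevity.

```rust
/// Toy stand-in for a tensor wrapped in `Param`: it carries an ID and a flag.
#[derive(Debug, Clone, PartialEq)]
struct ToyParam {
    id: u32,
    value: Vec<f32>,
    require_grad: bool,
}

/// A toy module with the three kinds of fields described above.
struct ToyModule {
    weight: ToyParam,       // trainable parameter
    running_mean: ToyParam, // saved with the weights, but frozen
    sinusoids: Vec<f32>,    // constant, recomputed at construction
}

impl ToyModule {
    fn new() -> Self {
        Self {
            weight: ToyParam { id: 0, value: vec![1.0, 1.0], require_grad: true },
            running_mean: ToyParam { id: 1, value: vec![0.0, 0.0], require_grad: false },
            sinusoids: (0..2).map(|i| (i as f32).sin()).collect(),
        }
    }

    /// The record only holds parameters; the constant is left out.
    fn into_record(self) -> Vec<ToyParam> {
        vec![self.weight, self.running_mean]
    }

    /// An optimizer-like update that skips parameters whose flag is false.
    fn step(&mut self, lr: f32, grad: &[f32]) {
        for p in [&mut self.weight, &mut self.running_mean] {
            if p.require_grad {
                for (v, g) in p.value.iter_mut().zip(grad) {
                    *v -= lr * g;
                }
            }
        }
    }
}

fn main() {
    let mut module = ToyModule::new();
    module.step(0.5, &[1.0, 2.0]);
    println!("weight after step: {:?}", module.weight.value);
    println!("frozen value after step: {:?}", module.running_mean.value);
    println!("constant: {:?}", module.sinusoids);

    let record = module.into_record();
    println!("record holds {} parameters", record.len());
}
```

The constant never appears in the record because it can be rebuilt by calling the constructor again, which mirrors the reasoning given for plain tensors above.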

Methods

These methods are available for all modules.

| Burn API | PyTorch Equivalent |
|---|---|
| module.devices() | N/A |
| module.fork(device) | Similar to module.to(device).detach() |
| module.to_device(device) | module.to(device) |
| module.no_grad() | module.requires_grad_(False) |
| module.num_params() | N/A |
| module.visit(visitor) | N/A |
| module.map(mapper) | N/A |
| module.into_record() | Similar to state_dict |
| module.load_record(record) | Similar to load_state_dict(state_dict) |
| module.save_file(file_path, recorder) | N/A |
| module.load_file(file_path, recorder) | N/A |

Similar to the backend trait, there is also the AutodiffModule trait to signify a module with

autodiff support.

| Burn API | PyTorch Equivalent |
|---|---|
| module.valid() | module.eval() |

Visitor & Mapper

As mentioned earlier, modules primarily function as parameter containers. Therefore, we naturally offer several ways to perform functions on each parameter. This is distinct from PyTorch, where extending module functionalities is not as straightforward.

The map and visitor methods are quite similar but serve different purposes. Mapping is used for

potentially mutable operations where each parameter of a module can be updated to a new value. In

Burn, optimizers are essentially just sophisticated module mappers. Visitors, on the other hand, are

used when you don't intend to modify the module but need to retrieve specific information from it,

such as the number of parameters or a list of devices in use.
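
The difference between the two can be sketched with a toy parameter container. This is an illustration built on the standard library only: `ToyParam`, `ToyModule`, `ToyVisitor`, `ToyMapper`, `CountParams`, and `Scale` are hypothetical names, and flat `Vec<f32>` values stand in for tensors.

```rust
/// Toy parameter: an ID plus flat values (a stand-in for a tensor).
struct ToyParam {
    id: u32,
    values: Vec<f32>,
}

/// Read-only access to each parameter.
trait ToyVisitor {
    fn visit(&mut self, id: u32, values: &[f32]);
}

/// Takes ownership of each parameter and returns its replacement.
trait ToyMapper {
    fn map(&mut self, id: u32, values: Vec<f32>) -> Vec<f32>;
}

struct ToyModule {
    params: Vec<ToyParam>,
}

impl ToyModule {
    /// Visiting borrows the module and leaves it unchanged.
    fn visit<V: ToyVisitor>(&self, visitor: &mut V) {
        for param in &self.params {
            visitor.visit(param.id, &param.values);
        }
    }

    /// Mapping consumes the module and builds a new one from the mapped values.
    fn map<M: ToyMapper>(self, mapper: &mut M) -> Self {
        let params = self
            .params
            .into_iter()
            .map(|p| ToyParam { id: p.id, values: mapper.map(p.id, p.values) })
            .collect();
        Self { params }
    }
}

/// Visitor that counts the total number of scalar parameters.
struct CountParams {
    total: usize,
}

impl ToyVisitor for CountParams {
    fn visit(&mut self, _id: u32, values: &[f32]) {
        self.total += values.len();
    }
}

/// Mapper that multiplies every value by a constant factor.
struct Scale {
    factor: f32,
}

impl ToyMapper for Scale {
    fn map(&mut self, _id: u32, values: Vec<f32>) -> Vec<f32> {
        values.into_iter().map(|v| v * self.factor).collect()
    }
}

fn main() {
    let module = ToyModule {
        params: vec![
            ToyParam { id: 0, values: vec![1.0, 2.0] },
            ToyParam { id: 1, values: vec![3.0] },
        ],
    };

    let mut counter = CountParams { total: 0 };
    module.visit(&mut counter);
    println!("number of parameters: {}", counter.total);

    let module = module.map(&mut Scale { factor: 2.0 });
    println!("scaled values: {:?}", module.params[0].values);
}
```

An optimizer fits the mapper shape: it receives each parameter, looks up the matching gradient by ID, and returns the updated value.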

You can implement your own mapper or visitor by implementing these simple traits:

/// Module visitor trait.
pub trait ModuleVisitor<B: Backend> {
    /// Visit a tensor in the module.
    fn visit<const D: usize>(&mut self, id: &ParamId, tensor: &Tensor<B, D>);
}

/// Module mapper trait.
pub trait ModuleMapper<B: Backend> {
    /// Map a tensor in the module.
    fn map<const D: usize>(&mut self, id: &ParamId, tensor: Tensor<B, D>) -> Tensor<B, D>;
}

Built-in Modules

Burn comes with built-in modules that you can use to build your own modules.

General

| Burn API | PyTorch Equivalent |
|---|---|
| BatchNorm | nn.BatchNorm1d, nn.BatchNorm2d etc. |
| Dropout | nn.Dropout |
| Embedding | nn.Embedding |
| Gelu | nn.GELU |
| GroupNorm | nn.GroupNorm |
| InstanceNorm | nn.InstanceNorm1d, nn.InstanceNorm2d etc. |
| LayerNorm | nn.LayerNorm |
| LeakyRelu | nn.LeakyReLU |
| Linear | nn.Linear |
| Prelu | nn.PReLU |
| Relu | nn.ReLU |
| RmsNorm | No direct equivalent |
| SwiGlu | No direct equivalent |

Convolutions

| Burn API | PyTorch Equivalent |
|---|---|
| Conv1d | nn.Conv1d |
| Conv2d | nn.Conv2d |
| ConvTranspose1d | nn.ConvTranspose1d |
| ConvTranspose2d | nn.ConvTranspose2d |

Pooling

| Burn API | PyTorch Equivalent |
|---|---|
| AdaptiveAvgPool1d | nn.AdaptiveAvgPool1d |
| AdaptiveAvgPool2d | nn.AdaptiveAvgPool2d |
| AvgPool1d | nn.AvgPool1d |
| AvgPool2d | nn.AvgPool2d |
| MaxPool1d | nn.MaxPool1d |
| MaxPool2d | nn.MaxPool2d |

RNNs

| Burn API | PyTorch Equivalent |
|---|---|
| Gru | nn.GRU |
| Lstm | nn.LSTM |
| GateController | No direct equivalent |

Transformer

| Burn API | PyTorch Equivalent |
|---|---|
| MultiHeadAttention | nn.MultiheadAttention |
| TransformerDecoder | nn.TransformerDecoder |
| TransformerEncoder | nn.TransformerEncoder |
| PositionalEncoding | No direct equivalent |
| RotaryEncoding | No direct equivalent |

Loss

| Burn API | PyTorch Equivalent |
|---|---|
| CrossEntropyLoss | nn.CrossEntropyLoss |
| MseLoss | nn.MSELoss |
| HuberLoss | nn.HuberLoss |

Learner

The burn-train crate encapsulates multiple

utilities for training deep learning models. The goal of the crate is to provide users with a

well-crafted and flexible training loop, so that projects do not have to write such components from

the ground up. Most of the interactions with burn-train will be with the LearnerBuilder struct,

briefly presented in the previous training section. This struct

enables you to configure the training loop, offering support for registering metrics, enabling

logging, checkpointing states, using multiple devices, and so on.

The currently provided APIs still make some assumptions, which may make them unsuitable for your learning requirements. Specifically, they assume your model will learn from a training dataset and be validated against another dataset. This is the most common paradigm, allowing users to do both supervised and unsupervised learning as well as fine-tuning. However, for more complex requirements, creating a custom training loop might be what you need.

Usage

The learner builder provides numerous options when it comes to configurations.

| Configuration | Description |

|---|---|

| Training Metric | Register a training metric |

| Validation Metric | Register a validation metric |

| Training Metric Plot | Register a training metric with plotting (requires the metric to be numeric) |

| Validation Metric Plot | Register a validation metric with plotting (requires the metric to be numeric) |

| Metric Logger | Configure the metric loggers (default is saving them to files) |

| Renderer | Configure how to render metrics (default is CLI) |

| Grad Accumulation | Configure the number of steps before applying gradients |

| File Checkpointer | Configure how the model, optimizer and scheduler states are saved |

| Num Epochs | Set the number of epochs |

| Devices | Set the devices to be used |

| Checkpoint | Restart training from a checkpoint |

When the builder is configured at your liking, you can then move forward to build the learner. The build method requires three inputs: the model, the optimizer and the learning rate scheduler. Note that the latter can be a simple float if you want it to be constant during training.
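
One way a plain float can stand in for a scheduler is to implement the scheduler interface for the float type itself. The sketch below shows the idea with the standard library only; `ToyLrScheduler`, `LinearDecay`, and `collect_rates` are hypothetical names and do not reflect the signatures used by burn-train.

```rust
/// Hypothetical scheduler interface: returns the learning rate for the next step.
trait ToyLrScheduler {
    fn step(&mut self) -> f64;
}

/// A plain float acts as a constant scheduler.
impl ToyLrScheduler for f64 {
    fn step(&mut self) -> f64 {
        *self
    }
}

/// A scheduler that decreases the rate by a fixed amount down to a floor.
struct LinearDecay {
    current: f64,
    decrement: f64,
    min: f64,
}

impl ToyLrScheduler for LinearDecay {
    fn step(&mut self) -> f64 {
        let lr = self.current;
        self.current = (self.current - self.decrement).max(self.min);
        lr
    }
}

/// Generic code accepts either kind of scheduler.
fn collect_rates<S: ToyLrScheduler>(mut scheduler: S, steps: usize) -> Vec<f64> {
    (0..steps).map(|_| scheduler.step()).collect()
}

fn main() {
    let constant = collect_rates(0.5_f64, 3);
    let decayed = collect_rates(
        LinearDecay { current: 1.0, decrement: 0.25, min: 0.5 },
        4,
    );
    println!("constant: {:?}", constant);
    println!("decayed: {:?}", decayed);
}
```

With this design, code that consumes a scheduler does not need a separate path for the constant case.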

The result will be a newly created Learner struct, which has only one method: the fit function,

which must be called with the training and validation dataloaders. This will start the training and

return the trained model once finished.

Again, please refer to the training section for a relevant code snippet.

Artifacts

When creating a new builder, all the collected data will be saved under the directory provided as

the argument to the new method. Here is an example of the data layout for a model recorded using

the compressed message pack format, with the accuracy and loss metrics registered:

├── experiment.log
├── checkpoint
│   ├── model-1.mpk.gz
│   ├── optim-1.mpk.gz
│   ├── scheduler-1.mpk.gz
│   ├── model-2.mpk.gz
│   ├── optim-2.mpk.gz
│   └── scheduler-2.mpk.gz
├── train
│   ├── epoch-1
│   │   ├── Accuracy.log
│   │   └── Loss.log
│   └── epoch-2
│       ├── Accuracy.log
│       └── Loss.log
└── valid
    ├── epoch-1
    │   ├── Accuracy.log
    │   └── Loss.log
    └── epoch-2
        ├── Accuracy.log
        └── Loss.log

You can choose to save or synchronize that local directory with a remote file system, if desired. The file checkpointer is capable of automatically deleting old checkpoints according to a specified configuration.
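
As an illustration of what such a deletion policy might look like, the sketch below keeps only the most recent checkpoints. The "keep the last N epochs" rule is an assumption made for this example, not a description of the file checkpointer's actual configuration, and `checkpoints_to_delete` and `checkpoint_files` are hypothetical helpers. The file names follow the layout shown above.

```rust
/// Given the epochs that currently have checkpoints, return the epochs whose
/// files should be deleted so that only the `num_keep` most recent remain.
fn checkpoints_to_delete(mut epochs: Vec<usize>, num_keep: usize) -> Vec<usize> {
    epochs.sort_unstable();
    let cut = epochs.len().saturating_sub(num_keep);
    epochs.truncate(cut);
    epochs
}

/// File names for one epoch, relative to the artifact directory.
fn checkpoint_files(epoch: usize) -> Vec<String> {
    ["model", "optim", "scheduler"]
        .iter()
        .map(|kind| format!("checkpoint/{kind}-{epoch}.mpk.gz"))
        .collect()
}

fn main() {
    let stale = checkpoints_to_delete(vec![3, 1, 2, 4], 2);
    for epoch in stale {
        for file in checkpoint_files(epoch) {
            // A real implementation would remove the file here.
            println!("would delete {file}");
        }
    }
}
```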

Metric

When working with the learner, you have the option to record metrics that will be monitored throughout the training process. We currently offer a restricted range of metrics.

| Metric | Description |

|---|---|

| Accuracy | Calculate the accuracy in percentage |

| Loss | Output the loss used for the backward pass |

| CPU Temperature | Fetch the temperature of CPUs |

| CPU Usage | Fetch the CPU utilization |

| CPU Memory Usage | Fetch the CPU RAM usage |

| GPU Temperature | Fetch the GPU temperature |

| Learning Rate | Fetch the current learning rate for each optimizer step |

| CUDA | Fetch general CUDA metrics such as utilization |
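
To illustrate what "accuracy in percentage" means for a classification output, here is a small sketch using plain slices in place of tensors. It is not the crate's implementation: `argmax` and `accuracy_percent` are hypothetical helpers, and the sketch assumes the predicted class is the index of the largest score in each row.

```rust
/// Index of the largest value in a row of scores (0 for an empty row).
fn argmax(row: &[f32]) -> usize {
    let mut best = 0;
    for (i, v) in row.iter().enumerate() {
        if *v > row[best] {
            best = i;
        }
    }
    best
}

/// Percentage of predictions that match the targets.
fn accuracy_percent(predictions: &[usize], targets: &[usize]) -> f64 {
    assert_eq!(predictions.len(), targets.len());
    if targets.is_empty() {
        return 0.0;
    }
    let correct = predictions
        .iter()
        .zip(targets)
        .filter(|(p, t)| p == t)
        .count();
    100.0 * correct as f64 / targets.len() as f64
}

fn main() {
    // One row of scores per sample, one target class per sample.
    let output = [[0.1_f32, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]];
    let targets = [1, 0, 0, 0];

    let predictions: Vec<usize> = output.iter().map(|row| argmax(row)).collect();
    println!("accuracy: {}%", accuracy_percent(&predictions, &targets));
}
```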

In order to use a metric, the output of your training step has to implement the Adaptor trait from

burn-train::metric. Here is an example for the classification output, already provided with the

crate.

/// Simple classification output adapted for multiple metrics.
#[derive(new)]
pub struct ClassificationOutput<B: Backend> {
    /// The loss.
    pub loss: Tensor<B, 1>,

    /// The output.
    pub output: Tensor<B, 2>,

    /// The targets.
    pub targets: Tensor<B, 1, Int>,
}

impl<B: Backend> Adaptor<AccuracyInput<B>> for ClassificationOutput<B> {
    fn adapt(&self) -> AccuracyInput<B> {
        AccuracyInput::new(self.output.clone(), self.targets.clone())
    }
}

impl<B: Backend> Adaptor<LossInput<B>> for ClassificationOutput<B> {
    fn adapt(&self) -> LossInput<B> {
        LossInput::new(self.loss.clone())
    }
}

Custom Metric

Generating your own custom metrics is done by implementing the Metric trait.

/// Metric trait.
///
/// Implementations should define their own input type only used by the metric.
/// This is important since some conflict may happen when the model output is adapted for each
/// metric's input type.
///
/// The only exception is for metrics that don't need any input, setting the associated type
/// to the null type `()`.
pub trait Metric: Send + Sync {
    /// The input type of the metric.
    type Input;

    /// Updates the metric state and returns the current metric entry.
    fn update(&mut self, item: &Self::Input, metadata: &MetricMetadata) -> MetricEntry;

    /// Clear the metric state.
    fn clear(&mut self);
}

As an example, let's see how the loss metric is implemented.

/// The loss metric.
#[derive(Default)]
pub struct LossMetric<B: Backend> {
    state: NumericMetricState,
    _b: B,
}

/// The loss metric input type.
#[derive(new)]
pub struct LossInput<B: Backend> {
    tensor: Tensor<B, 1>,
}

impl<B: Backend> Metric for LossMetric<B> {